How to delete or exclude pages/files

It is possible to exclude pages in different ways, each with their own set of pros and cons. It is recommended to read and understand all the ways of excluding pages before setting up the exclusion logic to ensure the best setup for your needs.

How to delete pages from the index

Deleting pages from the crawlers index can be done manually from the Page inventory. You can read more about the Page inventory in this article.

To manually delete pages from the crawler’s index, follow these steps:

- Navigate to Configuration › Page Inventory in the menu.

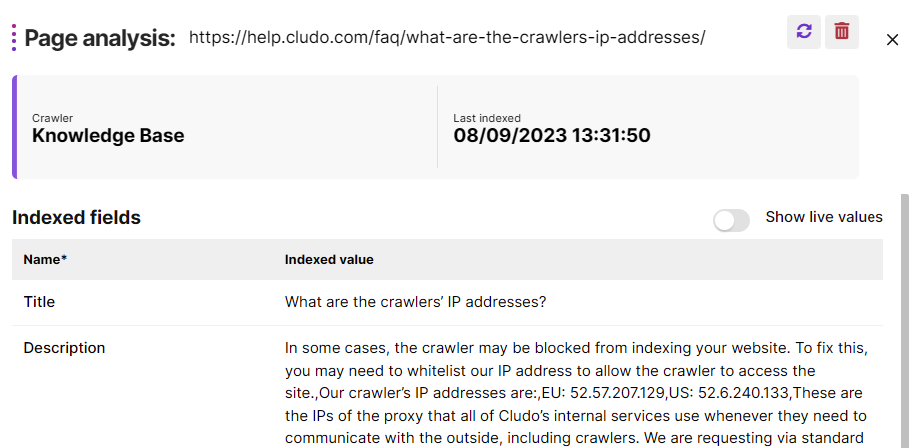

- Find and select the page you want to delete. This will open the Page Analysis. Ensure the page is associated with the correct crawler.

- Click the Trash icon in the top-right corner to delete the page.

How to exclude pages via the crawler

Crawler exclusions will prevent the crawler from ever indexing any pages that match the exclusion. Changes here affect all engines using the crawler in question.

- In the navigation, select Configuration › Crawlers.

- Select the crawler on the list that you would like to exclude pages for.

- To exclude based on specific URLs:

- Under Excluded from the crawl, enter a URL in the Pages field. You can use both absolute and relative URLs.

- Click the plus icon.

- To exclude based on a URL regular expression:

- Under Excluded from the crawl, enter a regular expression in the URL regex field.

- Click the plus icon.

- Click the Save Crawler button.

- All pages that match the exclusion(s) will now be ignored by the crawler. Any existing pages that match the exclusion(s) will be dropped from the index.

Example

A crawler is set up to exclude /login/. This will make the crawler ignore pages like:

clumobile.com/login/my_profile

clumobile.com/login/my_subscription

However, a page like clumobile.com/login will still be crawled as it does not end with “/”.

Example

A crawler is set up with a URL regex of “product\/\d{3}$”. This will exclude any product page where the page is exactly 3 numbers, such as:

clumobile.com/products/123

clumobile.com/products/456

clumobile.com/products/321

However, any product page with more or less than 3 numbers in the URL would still be crawled like:

clumobile.com/products/12

clumobile.com/products/1234

clumobile.com/products/12345

How to exclude pages via the engine settings (engine filters)

Excluding pages via the engine will prevent pages from being shown in the engine’s results. It will only affect search engines, and only the single engine that it is being configured for.

- In the navigation, select Configuration, then Engines.

- Select the engine to exclude pages for in the list.

- Under Advanced -> Pre-filter search results, Click the Add New Filter button.

- Select a crawled field to filter on in the dropdown.

- Type a field value in the field below the dropdown. Note that this value is case-sensitive and must reflect exactly what has been crawled.

- Click the plus icon.

- Optional: To add more field values, repeat the last two steps.

- Optional: To add more filters, repeat the last five steps.

- Click the Save Engine button.

Example

An engine is set up with a filter for the field “Category” to match “Blog”. This will limit the shown results for the engine to only show pages where the category is “Blog”.

How to exclude pages via the Excluded pages tool

Excluding pages via the excluded pages feature allows for specific pages to be removed from the search results of an engine. It will only affect search engines and only the single engine that it is being configured for.

- In the navigation, select Configuration, then Excluded pages.

- Select the engine to exclude pages for from the drop-down list.

- Click the New button.

- Choose to exclude pages by title or URL in the Search Pages By radio button.

- Insert the title or URL of the page to exclude in the Find page field.

- For the correct result in the list, click the plus icon.

- Optional: to add more pages repeat the previous three steps.

- Click the Save button.

- The selected page(s) are now added to the list of pages to be excluded from the search and will stay excluded until removed from the list.

Example

An engine is set up to exclude the blog overview page, leading the user to only find individual blog posts among the results.

How to exclude pages via canonicalization

Excluding pages via a canonical tag will prevent pages from being indexed because the content on the page also exists on a different URL. It will affect all search engines including external search engines like Google. Read more on canonicalization here.

Setting up canonical tags does not happen in MyCludo, but rather in the HTML of a page. The canonical tag must point to the URL of a different page to cause the page to be ignored.

Example

When interacting with the pagination of a blog page of a site, it generates a new URL, e.g. clumobile.com/blog/?p=1, clumobile.com/blog/?p=2, and so forth. We do not want any search engines to index these pages, but only the first page, clumobile.com/blog/.

To achieve this, a canonical tag is added to direct to the original /blog page:<link rel="canonical" href="https://clumobile.com/product/blog" />

With this canonical tag, the /?p= pages will not be indexed, but the /blog page will be.

How to exclude pages via noindex tags

Excluding pages via the noindex tag will prevent the page from being indexed at all. It will affect all search engines including external search engines like Google.

Setting up noindex tags is not done in MyCludo, but rather in the HTML of a page. A meta tag named “robots” must be set with the content value of “noindex” as shown here:

<meta name="robots" content="noindex">

Most CMS’es will have the option to exclude a page from search, which will inject a noindex tag on the page in question.

How to exclude pages via robots.txt rules

It is possible to exclude pages or entire areas of a site by leveraging robots.txt rules. The rules are flexible, so it is possible to make specific rules for Cludo, or make global rules which will also affect external search engines like Google and Bing.

Setting up the robots.txt file is not done in MyCludo, but rather in the hosting of the website. The file must follow a specific format, where one or more search agents can be addressed with certain rules for what must be crawled by that agent. Read more about robots.txt here.

Example of Robots.txt

User-agent: Googlebot

Disallow: /employee/

User-agent: cludo

Allow: /

User-agent: *

Disallow: /internal/

In the example above, the Google search engine (Googlebot) is not allowed to crawl any URL containing /employee/. Cludo’s user agent (cludo) is allowed full access to crawl any URL it can find on the site. Any search engine (*), including both Google or Cludo is not allowed to crawl any URL that contains /internal/.